Let’s talk about: AI sound separation technology

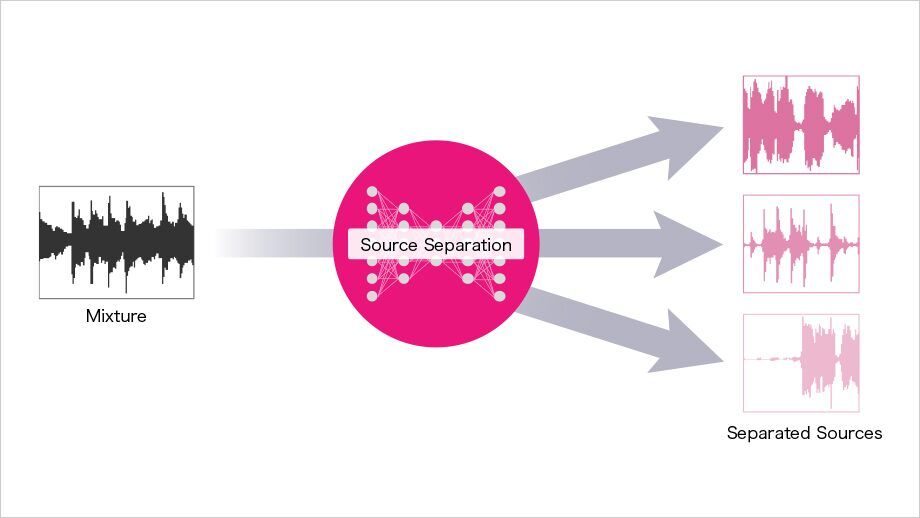

Sound separation is a technology that makes it possible to extract individual sounds from a mixed audio source. It was long considered incredibly difficult to accomplish, but in 2013 we incorporated Sony’s AI technology, which has allowed us to dramatically improve performance. The technology has already delivered results, for example in reviving classic movies, eliminating noise on smartphones, and enabling real-time karaoke for music streaming services, and we expect it to be applied to an even wider variety of fields in the future.

Here, Yuki Mitsufuji from our Tokyo R&D Center and Stefan Uhlich from our Stuttgart R&D Center tell us more.

Recreating human abilities using machines.

“When humans listen to a performance where multiple sounds are mixed together, we are able to distinguish individual instruments, or we can naturally focus on a single voice when having a conversation, even when surrounded by a large crowd,” Yuki explains. Until AI technology was utilised, this was extremely difficult to do using computers. “Some people described this task as mixing two juices and extracting one of them afterwards,” he recalls.

In the demo below, you can listen to three examples of our sound separation technology applied to a scene from Lawrence of Arabia, where we demonstrate how we can extract the dialogue, as well as various foley sounds.

AI sound separation works by teaching computers to fulfil the task.

Take a guitar as an example. This instrument has a very specific sound, a characteristic pattern of frequencies, that the neural network learns during training.

“In this training, the network sees a lot of music – more music than we will ever hear in our lifetime – together with the target sound that we should extract,” Stefan explains. Therefore, regardless of how many different sounds are mixed together in a recording, our AI system is able to identify the particular characteristics of the guitar and extract them.

“It is just like how we can spot an apple when we see one because we have seen many of them before,” Yuki summarises. “AI is applied to sound separation in much the same way, both mechanically and conceptually.”
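To make the training idea above a little more concrete, here is a minimal sketch in Python. It is not Sony’s system: instead of a neural network it fits a single least-squares gain per frequency band, and the "guitar" and "other" spectra are made-up toy data. The names `fit_bin_gains`, `separate` and `make_example` are hypothetical, and the sketch assumes that magnitudes simply add up in a mix, which real audio only approximates. What it does share with the description above is the supervised setup: the model sees many mixtures together with the target sound, and learns which parts of the spectrum belong to the target.

```python
import random

N_BINS = 8  # toy spectrum with 8 frequency bands (assumption for illustration)

def make_example(rng):
    """Return (mixture, guitar) magnitude spectra for one toy training example.

    Assumption: the "guitar" is strong in the low bands and weak in the high
    bands, and the "other" source is the opposite.
    """
    guitar = [rng.uniform(0.5, 1.0) if k < 4 else rng.uniform(0.0, 0.1)
              for k in range(N_BINS)]
    other = [rng.uniform(0.0, 0.1) if k < 4 else rng.uniform(0.5, 1.0)
             for k in range(N_BINS)]
    mixture = [g + o for g, o in zip(guitar, other)]
    return mixture, guitar

def fit_bin_gains(mixtures, targets):
    """Learn one gain per band that minimises sum((gain * mix - target) ** 2)."""
    gains = []
    for k in range(len(mixtures[0])):
        num = sum(m[k] * t[k] for m, t in zip(mixtures, targets))
        den = sum(m[k] ** 2 for m in mixtures)
        gains.append(num / den if den > 0 else 0.0)
    return gains

def separate(mixture, gains):
    """Apply the learned gains to a mixture to estimate the target source."""
    return [g * x for g, x in zip(gains, mixture)]

# "Training": show the model many mixtures together with the target sound.
rng = random.Random(0)
pairs = [make_example(rng) for _ in range(200)]
gains = fit_bin_gains([m for m, _ in pairs], [t for _, t in pairs])

# "Inference": estimate the guitar in a mixture the model has not seen.
test_mix, test_guitar = make_example(rng)
estimate = separate(test_mix, gains)
```

After fitting, the gains for the low bands end up close to 1 and those for the high bands close to 0, so the estimate keeps the guitar-heavy bands and suppresses the rest. A real network learns far richer, time-varying patterns, but the principle of learning from mixture and target pairs is the same.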

This technology can almost rewind time.

Using AI sound separation technology, we can revisit old songs, extract the vocals or separate the instruments, and remix the track. And for movies, it opens a whole new potential for immersive entertainment.

“In order to provide an immersive sound field to those watching movies, it is necessary to deliver sounds from a number of different angles and recreate a 3D audio space,” Stefan clarifies. “However, classic movies have the dialogue and sound effects on the same track, and so there has been a limit to what we can extract, and how immersive we can make the sound field. We started wondering if we could extend our technology to movies, and after learning from a sound effect (foley) library, our AI system was able to successfully extract individual sound effects from the master copy.”

You can see this in practice in the Lawrence of Arabia video above.

And then there are fields that may not immediately spring to mind when thinking about sound separation technology, but which certainly rely on it.

Yuki turns to aibo, Sony’s robotic dog. “aibo can respond to human voices and communicate, but if aibo simply gathers the surrounding sounds, noises such as aibo’s own mechanical sounds or wind noise will also be picked up. By using AI sound separation to extract human voices and remove all other background sounds, we have been able to improve its voice recognition capabilities.”

We’ve applied similar methods to our other products too. For example, Xperia™ smartphone customers enjoy clear human voices without wind disturbance, and our ‘karaoke mode’ technology, developed for a music streaming app, removes vocals in real time so that the user’s voice can be mixed with the sound source.
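As a rough illustration of the karaoke idea, the sketch below processes one short block of audio samples at a time. It assumes that a separation model has already produced an estimate of the vocals for that block, and that removing them is a simple subtraction; the article does not say how the actual product does this. The function name `karaoke_block` and the `voice_gain` parameter are hypothetical.

```python
def karaoke_block(mixture, vocal_estimate, user_voice, voice_gain=1.0):
    """Replace the original vocals with the user's voice for one audio block.

    All three inputs are equal-length lists of samples in the range [-1, 1].
    Assumption: `vocal_estimate` comes from a separation model, not shown here.
    """
    out = []
    for mix, voc, usr in zip(mixture, vocal_estimate, user_voice):
        backing = mix - voc                      # remove the estimated vocals
        sample = backing + voice_gain * usr      # add the user's voice
        out.append(max(-1.0, min(1.0, sample)))  # clip to the valid range
    return out

# One tiny block of three samples (made-up values).
block_out = karaoke_block(
    mixture=[0.5, -0.2, 0.1],
    vocal_estimate=[0.3, -0.1, 0.0],
    user_voice=[0.1, 0.1, 0.9],
)
```

Working on small blocks rather than the whole song is what makes real-time use plausible: each block can be separated and remixed as soon as it arrives.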

Looking to the future.

As Sony PSL and Sony Music Solutions start to offer this technology externally, Yuki is looking forward to what’s to come. “We hope that our technology will be like a time machine that allows artists from the past and present to collaborate across time.”

Stefan, meanwhile, is looking forward to seeing the technology expand even further. “From a technological point of view, we will see the transition to universal source separation where not only the number of sources is unknown but also the source types are unspecified,” he tells us. “People recognised this as a challenging but interesting scenario, which will enable even more commercial use cases.”

We can’t wait to see what new ground AI sound separation can help us explore. Where would you like to see it utilised?

This article has been adapted from a piece on Sony.net. The original can be found here: https://www.sony.net/SonyInfo/technology/stories/AI_Sound_Separation/
